A common complaint is that NIH’s peer review process can be overly conservative, because it is so focused on preliminary data guaranteeing that a project will work. That effect of peer review is probably even worse in today’s hypercompetitive environment, where it isn’t unusual for only the top 10% of grant applications to get funded. In that context, peer reviewers often look for any reason at all to keep a grant from getting funded.

This isn’t ideal for innovation. Pretty much any scientific breakthrough that we care about will have at least a few naysayers at first, even after the work is published in final form, let alone before the work has even started.

There are many ideas to improve peer review, such as looking for grants with highly disparate ratings (some peer reviewers love it, some hate it), or giving peer reviewers a “golden ticket” that lets them guarantee funding to one grant no matter how anyone else votes. These types of reforms hold out the hope of letting through more innovative ideas.
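To make those two proposals concrete, here is a minimal Python sketch. Everything in it is hypothetical: the application IDs, the 1-to-9 score scale (1 best), and the choice to measure reviewer disagreement as the gap between the highest and lowest score, which is only one of several reasonable spread measures.

```python
# Hypothetical reviewer scores per application (assumed scale: 1 = best, 9 = worst).
scores = {
    "A": [2, 2, 3],
    "B": [1, 8, 9],  # some reviewers love it, some hate it
    "C": [4, 5, 4],
}

def disparate(apps: dict[str, list[int]], min_spread: int) -> list[str]:
    """IDs whose reviewer scores differ by at least `min_spread` (max minus min)."""
    return sorted(a for a, s in apps.items() if max(s) - min(s) >= min_spread)

def golden_tickets(picks: dict[str, str]) -> set[str]:
    """Each reviewer names one application; every named application is funded,
    no matter how the other reviewers scored it."""
    return set(picks.values())

print(disparate(scores, min_spread=5))  # ['B']
print(sorted(golden_tickets({"r1": "C", "r2": "C", "r3": "A"})))  # ['A', 'C']
```

Under a golden-ticket rule, the set returned by `golden_tickets` would simply be added to whatever the normal ranking already funds.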

But for now, there is a serious obstacle to any such reforms or even modest experiments: The HHS and NIH grant policies as applied by the HHS Office of Inspector General.

Here’s why I say that. The Office of Inspector General (OIG) recently put out a report titled “Selected NIH Institutes Met Requirements for Documenting Peer Review But Could Do More To Track and Explain Funding Decisions.”

Hmmm, what does that mean? “Could do more”?

After going through some of the basic mechanics of how peer review works at NIH, the OIG notes that peer reviewers give all the applications a score; the applications are ranked; and any given Institute will have to draw a line somewhere (the “pay line”) based on what it can afford to fund that year.
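The pay-line mechanics can be sketched as a toy model. This is an illustration under stated assumptions, not how any particular Institute actually sets its pay line: each application carries one score (lower is better here) and a hypothetical dollar cost, and the Institute funds strictly down the ranked list until the next application would exceed its budget.

```python
from typing import Optional

# Hypothetical applications: (ID, score, requested dollars in thousands).
# Assumption for this sketch: a lower score means a better-ranked application.
apps = [("A", 12, 300), ("B", 25, 250), ("C", 8, 400), ("D", 30, 200)]

def pay_line(applications: list[tuple[str, int, int]], budget: int) -> tuple[list[str], Optional[int]]:
    """Fund applications strictly in rank order until the next one would exceed the budget.

    Returns the funded IDs (best first) and the score of the last funded application,
    i.e. where the pay line falls (None if nothing could be funded).
    """
    funded: list[str] = []
    spent = 0
    line: Optional[int] = None
    for app_id, score, cost in sorted(applications, key=lambda a: a[1]):
        if spent + cost > budget:
            break  # everything from here down falls below the pay line
        funded.append(app_id)
        spent += cost
        line = score
    return funded, line

print(pay_line(apps, budget=800))  # (['C', 'A'], 12)
```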

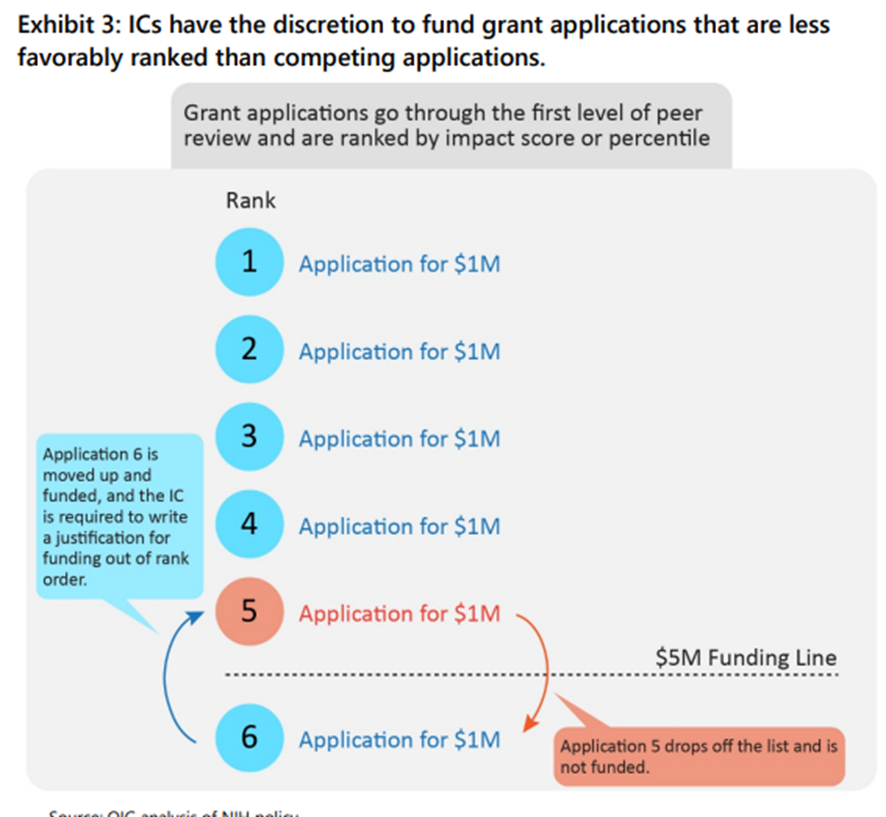

But herein lies the problem: Sometimes NIH officials don’t just automatically fund all grants above the pay line. Instead, they might fund a grant below the pay line instead of a grant above the pay line, as shown in this graphic:

So what, you might ask? According to OIG, whenever an NIH Institute or Center “decides to fund a grant application out of rank order, HHS and NIH policy requires the IC to write a justification for doing so,” and moreover, “HHS policy requires the justification to tie to factors documented in the funding opportunity announcement.”

In other words, funding grant applications outside of the strict order as determined by the scores given by peer reviewers is basically a huge no-no.

NIH can do it, sure, but only after writing up a long excuse for doing so, and the excuse has to correspond to what NIH wrote about the grant program ahead of time.
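What counts as “out of rank order” can be stated precisely. Here is a hedged sketch, assuming an award is out of order whenever some better-ranked application went unfunded. The function name and data layout are hypothetical; this is an illustration, not OIG’s or NIH’s actual method.

```python
def out_of_rank(ranked_ids: list[str], funded: set[str]) -> list[str]:
    """Return funded applications ranked below at least one unfunded application.

    `ranked_ids` is ordered best first; `funded` holds the IDs actually awarded.
    """
    flagged = []
    skipped_better = False  # has any better-ranked application gone unfunded so far?
    for app_id in ranked_ids:
        if app_id not in funded:
            skipped_better = True
        elif skipped_better:
            flagged.append(app_id)  # would need a written justification under the policy OIG cites
    return flagged

# Hypothetical ranking (best first): A was skipped, and B was funded instead.
print(out_of_rank(["C", "A", "B", "D"], funded={"C", "B"}))  # ['B']
```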

But why would NIH be required to obey peer reviewers so strictly?

Federal law states that NIH should use “technical and scientific peer review” as follows:

> In the case of any proposal for the National Institutes of Health to conduct or support research, the Secretary may not approve or fund any proposal that is subject to technical and scientific peer review . . . unless the proposal has undergone such review in accordance with such section and has been recommended for approval by a majority of the members of the entity conducting such review, and unless a majority of the voting members of the appropriate advisory council . . . has recommended the proposal for approval.

Another federal provision states that peer review should be conducted, “to the extent practical, in a manner consistent with the system for technical and scientific peer review applicable on November 20, 1985.”

But the actual federal regulations for NIH directly state that “recommendations by peer review groups are advisory only and not binding on the awarding official or the national advisory council or board.”

Given this legal and regulatory context, why would we worry so much that an NIH Institute funded a few grants “out of rank order”? NIH doesn’t have to strictly follow the peer review rankings under the binding federal regulations.

At most, NIH might have to limit funding to awards that got “approval by a majority,” but it is quite possible to imagine the peer review groups giving a majority vote to upwards of 50% of proposals with the full awareness that some further system (e.g., a lottery, or program officer discretion) would then (necessarily!) have to be used to decide which were the 10% that actually got funded.
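The two-stage idea in the previous paragraph can be sketched as follows: a strict-majority approval vote as the eligibility gate, then a seeded random draw among the approved applications. The vote format, the seed, and the function names are all hypothetical, chosen only to illustrate the structure.

```python
import random

def approved_by_majority(votes: dict[str, list[bool]]) -> list[str]:
    """Keep applications where a strict majority of reviewers voted to approve."""
    return sorted(a for a, v in votes.items() if sum(v) > len(v) / 2)

def lottery(eligible: list[str], n_awards: int, seed: int) -> list[str]:
    """Draw up to `n_awards` applications uniformly at random from the eligible pool."""
    rng = random.Random(seed)  # fixed seed: the draw can be reproduced and audited later
    return sorted(rng.sample(eligible, min(n_awards, len(eligible))))

# Hypothetical votes: True = recommend for approval.
votes = {
    "A": [True, True, False],
    "B": [False, False, True],
    "C": [True, True, True],
    "D": [True, False, True, False],  # a 2-2 tie is not a majority
}
eligible = approved_by_majority(votes)  # ['A', 'C']
print(eligible, lottery(eligible, n_awards=1, seed=2024))
```

Fixing the seed makes the draw reproducible, which would make it easy to document exactly how each award was chosen.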

If NIH wants to experiment with different models of peer review (as the law and regulations actually seem to allow), HHS and its Office of Inspector General need to get past such a narrow and restrictive interpretation.

It should be perfectly fine for NIH to say, “We took into account the peer reviewers’ scores, but we funded 50% of the grants in this round based on what our expert program officers thought was most worth funding,” or “based on the fact that some peer reviewers loved a grant while others hated it,” or “based on golden tickets given to each peer reviewer.”

Such innovations might be a great chance to fund more breakthrough science.

* * *

By the way, a couple of side observations:

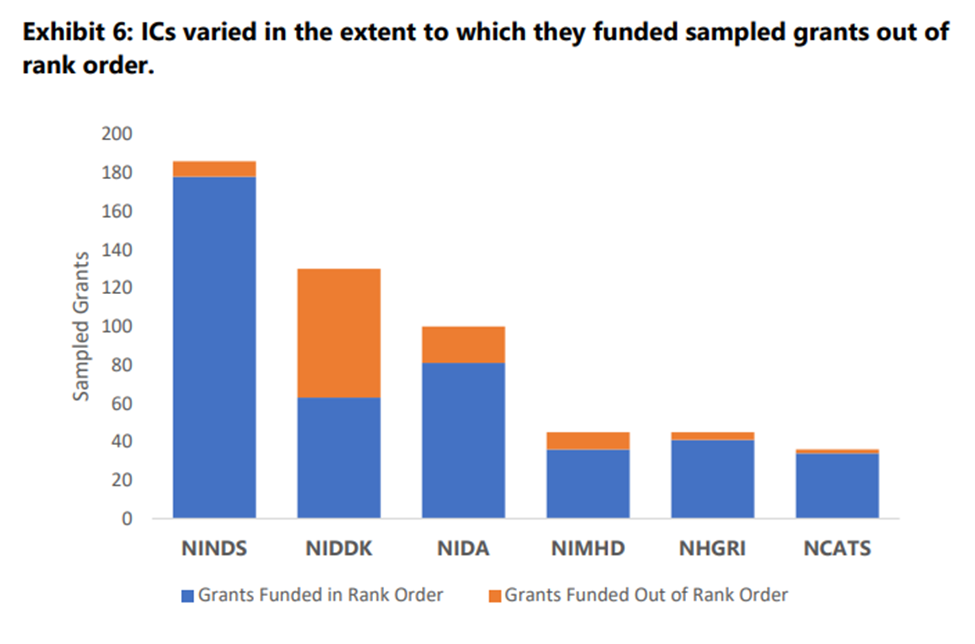

First, NIH Institutes varied widely in how often they funded grants out of order. For example, NIDDK seems to have done it about half the time, but NINDS barely ever did it. That in itself is interesting, and unexplained.

Second, we are only discussing 6 of the 27 Institutes and Centers here. Why? In OIG’s words, “NIH cannot easily determine the extent to which ICs fund grant applications out of rank order,” and even coming up with data on a mere 6 Institutes was a “time-consuming, manual process.” OIG says that this “raises questions” about whether NIH can “monitor and oversee ICs’ funding decisions.”

Not just that—it raises questions about whether the broader scientific community can learn anything about how peer review works, if there isn’t any good data outside of what was collected here.

External scholars should be allowed to access data from all of the relevant ICs. Then they can try to measure the impact of departing from peer review scores a lot (such as at NIDDK) or virtually never (some of the other institutes). That’s all part of figuring out how well peer review works.