My friend Vinay Prasad let me publish this as a guest piece in his newsletter today. Reprinting it here:

****

We are living in a time of growing distrust in science and scientific institutions. According to a 2022 Pew survey, “Trust in scientists and medical scientists, once seemingly buoyed by their central role in addressing the coronavirus outbreak, is now below pre-pandemic levels. Overall, 29% of U.S. adults say they have a great deal of confidence in medical scientists to act in the best interests of the public, down from 40% who said this in November 2020.”

At the same time, misinformation is everywhere. One recent paper found, in a national survey, that 37% of dog owners thought vaccines might make their dogs autistic.

Here’s the problem, and it’s true for science as much as it’s true for coworkers, spouses, or anyone else: Trust can only be earned, not demanded. And one of the most critical places where scientists, journals, and funders could earn that trust is by giving more prominence to replications and reanalyses that expose prior scientific errors.

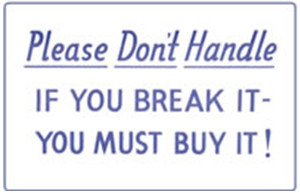

When scientists instead try to sweep such replications under the rug, they show that the public may actually be right to be skeptical of science. If scientific institutions want to earn the public’s trust, they need to adopt the Pottery Barn Rule: You break it, you buy it. In other words, if you’re a journal editor who published a substantially erroneous article, you have an obligation to publish replications, reanalyses, and criticisms. Same for funders.

When scientific institutions are more deliberately self-correcting, they will earn more public trust.

***

Early in the psychology replication crisis, one of the most scrutinized articles was by Cornell researcher Daryl Bem, who claimed to have proven that humans have precognition. In one experiment, for example, he asked undergrads to look at two sets of curtains on a computer screen and predict which one hid a pornographic image; notably, the correct answer was randomly chosen only afterwards. Chance performance would have been 50/50, but students purportedly made the “right” choice 53 percent of the time, supposedly indicating that they had a slight ability to predict a future event.

Stuart Ritchie and his colleagues tried to replicate one of Bem’s experiments. Unsurprisingly, they found no effect.

But journals weren’t interested in their “failed” replication. Even Eliot Smith of Indiana University, the editor of the Journal of Personality and Social Psychology (which had published Bem’s paper on precognition!), turned down Ritchie’s replication. Smith wrote: “This journal does not publish replication studies, whether successful or unsuccessful.” Ritchie et al. eventually published their results in PLoS ONE.

One might hope that this failure of journal ethics is a thing of the past. By now, surely every journal editor knows that replications are a key part of science, and that there is no justification for refusing to publish replications altogether.

Not so, unfortunately. One prominent health journal recently refused to correct serious errors on the grounds that it doesn’t publish replications.

For background: a 2018 article in Health Affairs examined how best to communicate with patients after a problem or adverse event. The paper studied what happened before and after a new communication program was implemented at four Massachusetts hospitals.

A few researchers (Florence LeCraw, Danial Montanera, and Thomas Mroz) found no fewer than three major errors in the 2018 article. For example, the original article made an elementary statistical error: it concluded that the treatment group did better than the comparison group without ever comparing the two groups against each other, relying instead on a comparison of the treatment group against its own baseline. This error (known as the difference in nominal significance, i.e., inferring that two groups differ because one shows a statistically significant change and the other does not) is common but serious, and it has led to the correction or retraction of articles in the past.
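To make the error concrete, here is a minimal sketch with made-up numbers (they are not from the Health Affairs article or its reanalysis). It assumes each group’s before/after change is an approximately normal estimate with a known standard error, and that the two groups’ estimates are independent; the helper name `two_sided_p` is hypothetical.

```python
import math


def two_sided_p(estimate: float, se: float) -> float:
    """Two-sided p-value for a normal (z) test that the true value is zero."""
    z = abs(estimate) / se
    return math.erfc(z / math.sqrt(2))


# Hypothetical numbers, NOT taken from the Health Affairs study:
# change from baseline (after minus before) in some outcome, with standard errors.
treat_change, treat_se = 10.0, 4.0  # treatment hospitals
comp_change, comp_se = 4.0, 4.0     # comparison hospitals

# The flawed approach: test each group only against its own baseline.
p_treat = two_sided_p(treat_change, treat_se)
p_comp = two_sided_p(comp_change, comp_se)

# The correct approach: compare the two changes directly
# (assuming the two groups' estimates are independent).
diff = treat_change - comp_change
diff_se = math.sqrt(treat_se**2 + comp_se**2)
p_diff = two_sided_p(diff, diff_se)

print(f"treatment vs. its baseline:  p = {p_treat:.3f}")
print(f"comparison vs. its baseline: p = {p_comp:.3f}")
print(f"treatment vs. comparison:    p = {p_diff:.3f}")
```

With these illustrative inputs, the treatment group’s change falls below the conventional 0.05 threshold and the comparison group’s does not, yet the direct test of the difference between the two groups is not significant either. Only that last test addresses whether the program actually outperformed the comparison hospitals.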

Yet a senior editor at Health Affairs said that “their policy is to not publish replication papers even when peer review errors are identified since their audience is not interested in this type of paper.”

To make matters worse, these same researchers heard the same message from other journal editors:

> At the 2022 International Congress of Peer Review and Scientific Publication, four editors-in-chief of medical journals told one of us that they also did not publish replication studies. They all said they were concerned that publishing replication papers would negatively affect their impact factors. That editorial boards’ main concerns seem to be monetary or visibility-related, as opposed to improving science or potentially correcting significant errors, is deeply troubling.

This is a failure of responsibility on journal editors’ part. When a journal publishes an article with elementary statistical errors that invalidate its findings, the journal has a responsibility to publish the truth at least as prominently as it published the original mistake.

In some cases, to be sure, a letter to the editor might suffice for minor corrections, and journal editors obviously have the responsibility to make sure that a failed replication is indeed accurate and important enough to publish.

But in the case of failed replications that expose obvious errors in the original article, a short letter will likely be inadequate to address the journal’s earlier mistake. We all know that such letters won’t be as widely read, and the original article will probably still be cited and read nearly as often. (Indeed, in psychology, the publication of a failed replication reduces the original article’s citation rate by only 14%, and another study even found that non-replicable papers are cited at a higher rate than replicable ones.)

What these medical and health journals are saying, however, is that they place a higher priority on citations and audience interest, even if it occasionally means allowing an undisputed error to stay in the scholarly record.

Put another way, they prefer popularity over truth, when the two are in conflict.

This is not a respectable scientific position, nor does it deserve public trust.

Communication versus Denial

Sometimes hospitals and doctors make mistakes in caring for a patient. Sometimes the patient even dies as a result. A key question is how the hospital should react afterwards.

One traditional approach can be called “deny, delay, defend.” In other words, the hospital, driven by concern about legal liability, refuses to admit any wrongdoing, delays any resolution of the case, and stays on the defensive.

The problem with “deny, delay, defend” is that it almost certainly raises the risk of continued medical errors in the future. After all, the hospital can’t restructure the way it handles surgeries, or fire a negligent doctor, if it is simultaneously refusing to admit that a mistake occurred.

A better approach focuses on communication and candor. The hospital and/or doctor tries to give full information to the patient or their families, to apologize for any medical error, to say what will be done in the future to prevent such errors, to compensate the patient, and more. There is growing evidence that such an approach leads to better results all around.

Scientific journals could learn from the experience of hospitals: when a mistake has been made, it’s better to communicate fully about that error and try to prevent errors in the future, rather than deny and defend.

The Pottery Barn Rule

Over 11 years ago, Sanjay Srivastava wrote an instant classic on how journals should think about replications. He said they should live by the Pottery Barn Rule: “once you publish a study, you own its replicability (or at least a significant piece of it).”

As Srivastava put it: “This would change the incentive structure for researchers and for journals in a few different ways. For researchers, there are currently insufficient incentives to run replications. This would give them a virtually guaranteed outlet for publishing a replication attempt.”

Next, “a system like this would change the incentive structure for original studies too. Researchers would know that whatever they publish is eventually going to be linked to a list of replication attempts and their outcomes.”

Finally, such a rule “would also increase accountability for journals. It would become much easier to document a journal’s track record of replicability, which could become a counterweight to the relentless pursuit of impact factors.”

***

What should the next steps be for scientific institutions to stop disincentivizing replications? How can they instead act in ways that deserve more public trust?

Here are some ideas:

* The Committee on Publication Ethics (COPE) could revise its official guidance for scholarly journals on “post-publication discussions and corrections.” Specifically, the guidance should affirmatively state that an outright ban on direct replications is, per se, an ethical violation. Moreover, replications pointing out serious, substantive errors in a published piece must be published with the same prominence as the original piece.

* TOP Factor, the effort to rate journals against the TOP (Transparency and Openness Promotion) Guidelines, could rate more medical journals. Where a journal’s policies do not mention replication, someone could contact the journal to ask whether it will publish replications.

* Funders like NIH could offer a bounty to scientists and data analysts who perform replications of prior NIH-funded studies in a way that points to errors or outright fraud. The bounty shouldn’t cover the full costs of redoing experiments, because that might encourage unnecessary replications aimed at finding mistakes where there were none. But when someone takes the time and trouble to do a replication, and does in fact discover errors or fraud, they should be at least partially compensated for their effort.