Science funding agencies often have programs explicitly aimed at funding high-risk, high-reward science. But the role of peer review in such programs is an open question. In a competitive environment, a consensus approach to peer review can make it difficult to fund anything that is actually very risky, since any high-risk project is likely to attract a few naysayers. Thus, those programs can often fall short without significant cultural change in how peer review works, or in whether peer review is used at all.

Agency staff often fall prey to “apple pie positions” — i.e., beliefs that seem unquestionable (such as, “science means that you use peer review”).

Even when an official policy tells agency staff to hand out grants without using peer review, they might not be willing to use that newfound authority.

***

Here’s a lesson from the NSF and its history of trying to make “high risk” grants outside of what it calls merit review (I’ll still call it “peer review”). The NSF Small Grants for Exploratory Research program (or SGER, often pronounced “sugar”) was active from 1990 to 2006, and gave NSF staff the authority to fund fairly small and short-term grants without going through the normal peer review process.

The idea was that program staff might find exciting and innovative opportunities in their field, and that they should have the flexibility to fund such grants with less bureaucracy and internal vetoes.

That didn’t happen anywhere near as often as expected. An evaluation of SGER grants found that the program was “highly successful in supporting research projects that produced transformative results as measured by citations and as reported through expert interviews and a survey.” So far, so good.

However, the “funding mechanism was about 0.6 percent of the agency’s operating budget, meaning that the programme was operating far below the 5 percent of funds that could be committed to this activity.”

In other words, NSF staff could have used their authority to make SGER grants at least 8 times more often.
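For anyone who wants to check that figure, here is the back-of-the-envelope arithmetic as a short Python sketch. The function name `headroom_ratio` is made up for illustration, and the calculation assumes that the 0.6 percent and 5 percent figures are measured against the same budget base, which is how the quoted passage reads.

```python
def headroom_ratio(used_pct: float, allowed_pct: float) -> float:
    """Return how many times larger the allowed share is than the share actually used."""
    if used_pct <= 0:
        raise ValueError("used_pct must be positive")
    return allowed_pct / used_pct


# Figures quoted from the SGER evaluation; both are assumed to share one budget base.
sger_used_pct = 0.6     # share of the operating budget actually spent on SGER
sger_allowed_pct = 5.0  # share that could have been committed to SGER

ratio = headroom_ratio(sger_used_pct, sger_allowed_pct)
print(f"Allowed share is about {ratio:.1f} times the share actually used")
```

The ratio comes out a little above 8, which is where "at least 8 times" comes from.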

What happened next? As of 2009, the SGER grant mechanism was replaced by two separate grant mechanisms: EAGER (EArly-concept Grants for Exploratory Research) and RAPID (despite the all-caps, RAPID doesn’t stand for anything other than “Rapid Response Research”).

RAPID grants are intended for “proposals having a severe urgency with regard to availability of, or access to data, facilities or specialized equipment, including quick-response research on natural or anthropogenic disasters and similar unanticipated events,” while EAGER grants are for “exploratory work in its early stages on untested, but potentially transformative, research ideas or approaches,” including work that “may be considered especially ‘high risk-high-payoff’ in the sense that it, for example, involves radically different approaches, applies new expertise, or engages novel disciplinary or interdisciplinary perspectives.” The NSF is careful to point out that the EAGER mechanism can’t be used for grants that would be eligible for other lines of NSF funding.

As of the 2009 launch, NSF started using RAPID and EAGER together at a somewhat higher rate than SGER had been used before. From a 2016 report:

Nonetheless, the number of awards still wasn’t that high—nothing close to 8x.

As reported in Science in 2014, “the 5-year-old [EAGER] program doles out only one-fifth of what some senior NSF officials think the foundation should be spending on EAGER grants. … The answer seems to be the absence of outside peer reviewers—generally considered the gold standard for awarding federal basic research grants. Many NSF program officers seem to be uncomfortable with that alteration to merit review. And so a mechanism designed to encourage unorthodox approaches is languishing because it is seen as going too far.”

Did things change after that article? Not really.

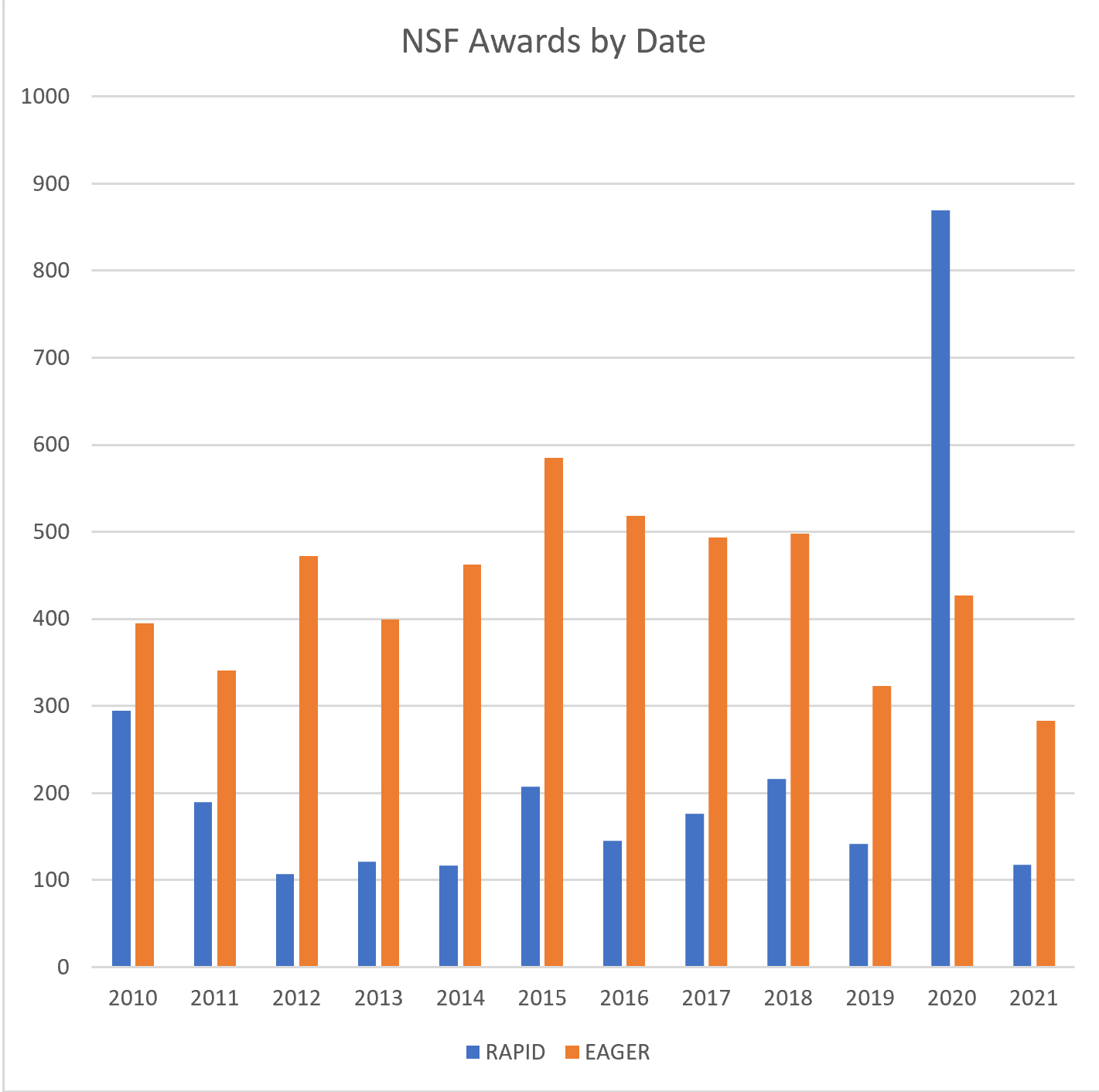

According to the most recent data available, here are the numbers of awards for RAPID and EAGER grants through 2021:

As you can see, there was a huge spike in RAPID awards in 2020, indicating that the NSF responded to Covid very quickly at the behest of Congress. Indeed, NSF was several months quicker than NIH in this regard, as an excellent report from the Institute for Progress recently pointed out.

Nonetheless, the spike in RAPID grants seems to be a one-year anomaly. By 2021, well before Covid was over, the number of RAPID awards dropped to a level not seen since 2014.

Meanwhile, the number of EAGER grants was basically unaffected by Covid. Even in 2020, the number of EAGER awards was lower than it had been in most years from 2012 to 2018, and in 2021, it was the lowest since the program was fully rolled out in 2010.

Why might these well-intended programs be so underused, with the exception of RAPID grants in one year (2020)? Why would NSF officials tell Science that program officers are nervous about making grants outside of peer review?

***

Let’s look to a theory from a respected thinker in Silicon Valley (Shreyas Doshi, who has worked at Stripe, Twitter, Google, and Yahoo):

Apple pie positions.

What does that mean? It’s like the cliche “motherhood and apple pie”: things that everyone likes, or at least that no one wants to publicly criticize. Apple pie positions are ideas or statements that seem like common sense in an organization, and that no one can argue against, but that can often have perverse results because they bog everyone down in bureaucracy and stagnation.

Doshi gives several examples here and here. Imagine a business meeting where an important topic is being discussed. Everyone seems near a decision, but then someone who is trying to look intelligent says, “Don’t we need more executive buy-in on this idea?”

That’s an apple pie position, according to Doshi. Who is going to oppose executive buy-in at that point? After all, what if the main VP or CEO hears that you spoke up in a meeting to say that their input wasn’t needed?

So everyone sits back and waits for the VP or CEO to be briefed in further meetings. Who knows what will happen next, or how long it will take? As a result, an important decision is held up for the sake of further meetings that may not have been necessary. If that happens time and time again, the business will lose the ability to act quickly and decisively.

Another example from Doshi: Someone speaks up in a meeting to say, “We need more data to know how to create good success metrics for that.”

Apple pie position. No one in a meeting wants to speak up against having more data or against success metrics. But again, the result is that the decision is derailed, perhaps indefinitely, until people have a whole separate series of meetings about what data is available, or what success metrics would be, and who is supposed to track them, etc.

That might be a good idea in some cases, but not everything needs success metrics. To quote Doshi, “I’m not saying data is bad, but I am saying that oftentimes these questions that are essentially aimed at making us look smart . . . are unnecessary. In fact, if we address them, we might actually be harming the mission, rather than helping it, because the fact is, you cannot produce data for every single thing.”

***

How might apple pie positions look at a science funding agency like NSF?

Consider the longstanding practice in which everything gets run through a rigorous peer review process. Peer review is often written into federal law, and everyone seems to think it is a sine qua non of science (even if it’s arguably not).

Along comes a program that says you—an NSF employee—can fund some grants outside of peer review.

To you, this authority seems unprecedented, and you’re not sure how you’ll be judged by your own bosses or peers if you go all out. All it takes is for one colleague to say something like this: “Hmmm, are you sure about not running that grant through peer review? Some people in the field might be skeptical.”

(In fact, all it might take is just imagining such a reaction from a colleague.)

Given the context and history, peer review is an apple pie position in places like NSF and NIH. Few people on the front lines of a science funding agency would be willing to take a bold stand against peer review.

The result is what NSF officials told Science: program officers don’t want to take the personal risk of ignoring peer review while funding “high-risk” research.

To me, there’s not much point in establishing high-risk, high-reward programs, or in creating mechanisms to circumvent peer review, if agency staff are too nervous to use their authority very often.

The true “apple pie position” needs to be flipped. That will only happen if agency leadership bends over backwards to assure staff that they are not only allowed to use these funding authorities but expected to do so. Agency leadership should give internal speeches about how giving grants outside of peer review is a wonderful thing. They should try to create a culture wherein every meeting about “rapid” grant programs involves questions like this: “Why is anyone proposing such-and-such process? That will take an extra 4 weeks. Shouldn’t we do everything possible to get these grants out the door more quickly?”

Otherwise, even well-intentioned policies on agency flexibility and “high-risk” research will run aground, because agency staff will still think the apple pie position is to stick with the traditional approach.