Introduction

DARPA’s customer relationships and the individual agency of its PMs are possibly the two biggest differentiators of the DARPA model from bigger-budget science funders like the NSF and NIH. Even in DARPA’s most basic research funding decisions, the question of “how can this be used by the military services?” finds its way into the discussion. In some generations of the organization, being “useful to the military services” is interpreted quite broadly, with justifications such as, “what’s good for the nation will be good for DARPA.” This approach was often taken by DARPA’s Information Processing Techniques Office (IPTO) in the 1960s. Arthur Norberg and Judy O’Neill, who wrote an in-depth history of IPTO from its earliest days that included interviews with prominent individuals such as Robert Taylor, summarized the priorities of early IPTO directors as follows: “A contribution to the country which included a contribution to the military is what IPTO directors sought.” With work such as the development of VLSI computer chips, that ethos has often proved to be a sound heuristic.

However, in different generations of the organization (whether driven by wartime, fiscal austerity in DC, or the preferences of individual directors), being “useful to the military services” has been interpreted more narrowly. The first prominent example of this is DARPA under Director George Heilmeier. Another is Tony Tether’s tenure as Director, which began in 2001. In this era, Tether went as far as to make many funding decisions contingent on a specific armed service putting in a budget wedge to continue developing the project past a certain stage. Neither approach is right or wrong. ARPA-like orgs often need to be able to adapt to changing situations like these.

Regardless, whether your customer is the US military, medical patients, or a certain subset of academics, all ARPA-like orgs serve some customer. This piece will dive into how DARPA’s Strategic Computing Initiative (SC) served its military customers in the 1980s — an era in Washington DC that demanded DARPA justify most of its spending as having clear, near-term value to the armed services.

Context

The three projects covered in this piece are all examples of DARPA’s attempts to find early military use cases for the exciting advances that had been coming out of its computing portfolio since the 1960s. Some pieces of the IPTO portfolio clearly followed the vision of Robert Kahn, who headed IPTO at DARPA, of “pushing” technology out into the world to find applications. Meanwhile, other areas of IPTO’s portfolio relied more strongly on using projects to stimulate demand “pull” for technological capabilities.

Robert Cooper, the DARPA Director at the time, was the counterbalance to Kahn and his beliefs. The program that Kahn envisioned was, put plainly, not considered sellable in DC. To sell the program, Cooper helped center the pitch around three new military systems that would result from the program if its individual research programs panned out in a moderately successful way. The three early descriptions of the applications became the centerpiece of the report that pitched SC to Capitol Hill. These applications were meant not just to give non-technical politicians a clear sense of what they were paying for; Cooper also wanted to use them to help steer IPTO’s research funding in what the SC management hoped would be a coherent and politically explainable direction.

Beginnings of All Three Programs

From an intellectual standpoint, Kahn was considered the figurehead of DARPA’s 1980s Strategic Computing Initiative. The initiative was meant to expand on the prior decades of computing research breakthroughs as well as find useful military applications for the growing body of work. However, it would be DARPA Director Robert Cooper, who had a Ph.D. background but favored industry-focused, goal-oriented engineering research more than Kahn did, who would succeed in selling the program to Washington. A large part of the reason he proved to be a better fit for this sales task was that he, more than Kahn, was willing and able to outline specific application technologies that SC’s technology portfolio could contribute to once all the work had been done. Kahn felt that technological development was uncertain and that doing this was something of a fool’s errand. Cooper also seemed to understand that what they did at DARPA was an uncertain enterprise, but he recognized that if DARPA wanted an expanded computing budget, then this was the kind of pitching that DARPA would need to do.

Roland and Shiman — who wrote a history of SC and interviewed many of its key players in the process — described Cooper’s thought process:

“Technology base” sounds fine but it does not mean much to those outside the computer community. Just what will that technology base allow you to do? What is its connection to the real world? How will it serve the defense mission? What new weapons and capabilities will it put in the hands of the commander in the field? These are the real metrics that allow you to measure the worth of such an investment. And any such goals have to be time specific. It is not enough to say that a robotic pilot’s assistant will be possible at some time in the future; there has to be a target date. Between now and then there must be benchmarks and milestones, metrics of progress demonstrating that the program is on course and on time.

Clinton Kelly, who originally joined DARPA as a PM in its Cybernetics Office before moving to the Defense Sciences Office when Cybernetics shut down, was asked by Robert Cooper to help define the applications efforts. Kelly, an engineering Ph.D. who had also run a company related to automating intelligence decision-making, had been funding a variety of somewhat odd mechanical machines while at DARPA, such as a robot that could do backflips. Kelly was known to be a great technology integrator and organizer, so he was very well-suited to the task Cooper had assigned him. The three applications that Kelly helped outline incorporated the capabilities that Kelly and others thought were likely to come from Kahn’s technology base and to intrigue the military services. The three major SC applications that Kelly helped jumpstart through his work on Cooper’s report were Pilot’s Associate, Battle Management, and Autonomous Land Vehicle.

Progress towards these three applications would often be used to help steer and benchmark the program in the coming years. Many of the benchmarks included in the original SC report — such as real-time understanding of speech with vocabularies of 10,000 words understandable in an office environment or 500 words in a noisy environment — were set with the needs of these applications specifically in mind. Stated goals like helping a fleet commander plan for multiple targets or providing the military the ability to send an unmanned reconnaissance vehicle across a minefield helped ground the vision of the program to its funders and customers who were less likely to see the importance of the stale computer benchmarks — such as those used in the computer architecture section of the SC write-up.

While Kahn eventually relented, he still saw these applications as Cooper imposing a NASA style of operations on DARPA. NASA focused on working towards challenging point solutions rather than on broad, flexible technological development, as IPTO historically had. As the years went on and work progressed, these projects proved that Kahn and Cooper both had good points. Regardless, the applications proved successful in helping DARPA win an expanded computing budget for SC. In 1983, work on the program commenced.

Pilot’s Associate Operations

The Pilot’s Associate (PA) application was meant to assist aircraft commanders in all cockpit decision-making and planning that could reasonably be augmented by sensors and then-modern computing. The PA planned to incorporate the new hardware, AI/software, and natural language understanding capabilities expected to emerge from DARPA’s funding of the computer technology base. DARPA hoped that the PA would eventually help the aircraft commander perform tasks like preparing and revising mission plans. They hoped for the system to be small enough to fit into a cockpit and outfitted with a natural language understanding system so a pilot could deliver some verbal commands while they used their hands to operate the aircraft. The PA would be a “virtual crew member.” There were even comparisons, at the time, to the system functioning as a pilot’s own R2-D2 unit — although others in the military pushed back on this specific analogy.

Once SC was announced, DARPA’s first step in working on the PA application project was contracting Perceptronics (a research company that DARPA was then using as a contractor for its SIMNET computerized combat simulation training project) and other contractors to carry out exploratory studies in the area. These studies were meant to more concretely outline how the system would work, as the description of PA in the original SC plan was quite vague. These studies established that, most likely, five separate “expert systems” could work in concert to carry out the functions of the final PA application.

In the era of strategic computing, “expert system” was a common term to reference software programs — often built by combining the efforts of programmers and specific domain experts — which used codified sets of rules to help carry out tasks in a faster and more accurate fashion. In their simplest forms, expert systems might just be made up of meticulously constructed “if, then” rules. Expert systems were, at the time, considered a branch of AI research — albeit a less exciting one than more generalizable AI systems to AI research purists. However, in an era when generalizable AI was often not living up to any level of commercial promise, expert systems programs, which nowadays would simply be considered “software programs,” were proving interesting to the military and large companies alike. These programs showed real promise in bringing the fruits of the AI research community to a wide variety of sectors and customers. Unlike more pure AI systems at the time, expert systems work had actually even proven profitable in the private market.
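To make the “if, then” framing concrete, here is a minimal sketch of what a rule-based advisory program can look like. Everything in it is an assumption made for illustration: the rule names, thresholds, sensor fields, and advice strings are hypothetical and are not drawn from the actual PA software or any system of the period.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Sensor readings keyed by name. All field names here are hypothetical.
Facts = Dict[str, float]


@dataclass
class Rule:
    """One "if, then" rule: a condition over the facts and the advice it yields."""
    name: str
    condition: Callable[[Facts], bool]
    advice: str


# Hypothetical rules, invented purely for illustration.
RULES: List[Rule] = [
    Rule(
        name="low_fuel",
        condition=lambda f: f.get("fuel_fraction", 1.0) < 0.2,
        advice="Replan the route to the nearest friendly airfield.",
    ),
    Rule(
        name="stuck_fuel_valve",
        condition=lambda f: f.get("valve_commanded_open", 0) == 1
        and f.get("fuel_flow", 1.0) == 0.0,
        advice="Switch to the backup fuel valve.",
    ),
]


def evaluate(facts: Facts, rules: List[Rule] = RULES) -> List[str]:
    """Return the advice of every rule whose condition holds, in rule order."""
    return [rule.advice for rule in rules if rule.condition(facts)]


if __name__ == "__main__":
    readings = {"fuel_fraction": 0.15, "valve_commanded_open": 1, "fuel_flow": 0.0}
    for line in evaluate(readings):
        print(line)
```

Real expert systems of the era layered much more on top of this, such as inference engines that chained rules together and tools for eliciting rules from domain experts, but the core idea of codified conditions mapped to actions is the same.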

So, it is not surprising that PA’s project planning was going to rely on the more application-ready expert systems paradigm. In the case of PA, the preliminary studies determined that the five separate expert systems that would make up the final software of the machine would cover the following areas: mission planning, systems status, situation assessment, tactics, and front-end communication with the pilot. As the PA project went on, most of the direct planning for the PA system was done by scientists and engineers at the Wright Aeronautical Laboratory at Wright-Patterson Air Force Base in Dayton, Ohio. The Air Force’s excitement to largely take the reins of the project from its early days was likely due to the fact that, in the years leading up to this point, the Air Force had begun developing its own labs to explore the application of AI to Air Force matters. Major Carl Lizza, who had a master’s degree in computer science, was the program manager on Wright’s end for much of the project. On DARPA’s end, John Retelle was the program’s PM until 1987, when another DARPA PM took over. With high-level buy-in and excitement from the Air Force, minimal direction was needed from DARPA on the direct development and implementation of the PA system. The customer buy-in from the Air Force was quite a positive sign for DARPA.

Following the study stage of the project, the implementation of the PA program began in 1986. McDonnell Aircraft Company was the prime contractor for the air-to-ground system. Three companies in total made up this portion of the contract. Lockheed was the prime for the air-to-air system. Eight companies and university research teams were on that contract. McDonnell was awarded $8.8 million (~$23 million today) from DARPA and Lockheed $13.2 million (~$38 million today). Each company was also willing to cover 50 percent of the costs of the development work because they felt working on the contract would give them an advantage in bidding on the big-budget contract for the Advanced Tactical Fighter, which would be awarded in the coming years. These performers would look to take advantage of the equipment and methods that had been coming out of the DARPA portfolio, as well as industry, in recent years. They employed LISP, Symbolics, and Sun machines as well as many of the new expert systems development methods which DARPA often funded.

The project would also make heavy use of expert boards in planning its work schedule and would work with the customers in developing successive versions of prototypes. As is common in military R&D applications projects, the team leveraged expert advisory boards from academia and industry on an ongoing basis. Help from these sorts of boards seems less common when making small decisions in projects further away from implementation, such as those made in the Defense Sciences Office in comparison to the systems technology offices. Also, as this was absolutely a product for a paying customer, the performer teams heavily involved the users of the eventual product throughout the design process. In fact, much of the development work by the industry contractors would look quite familiar to readers who know modern software development. The project relied heavily on the rapid prototyping approach to managing its workflow and benchmarking its progress. Several demos were set to happen in the first three years of the project that would prove the system’s function and value. After several successful demos and the proof of concept were completed, the work could then progress to the development needed to ensure that the system worked in live combat situations. From a high level, that was how the project was meant to progress.

Non-PA, but PA-relevant research and engineering work that was in earlier technology readiness stages was overseen by other PMs in the SC portfolio. This bit of bookkeeping is of practical importance to how the projects were managed. The PA-relevant work on the broader technology base — that was still related to the application and might, at some point, make it into the PA system — generally fell to PMs in IPTO. These PMs working on other pieces of the broader portfolio did not “answer to” the PMs of the PA, Battle Management, or ALV applications, in spite of the importance of those three projects to SC. The work in these other PMs’ portfolios, of course, was meant to prove useful to projects like the PA. However, the PA team did not have any direct power to influence other PMs’ priorities to be more in line with PA’s direct needs. The PMs in the broader SC portfolio were meant to help develop better component technology to help projects like PA in the future, but they were generally able to decide on the best possible deployment of their own portfolios.

As the PA project was in its early planning stages, it seemed clear that a sufficient level of expert systems knowledge and experience was generally available to make an application like PA feasible at the time. But at least two notable technical risks existed that the PA team was relying on developments from the broader SC portfolio to overcome. The first risk was the need for a computer to be developed that was powerful enough — and small enough — to make the system work in real time. It was not going to be clear exactly how much more powerful a computer would need to be to make the PA work until more expert systems development was done by contractors on the project. But it seemed clear that a computer about an order of magnitude more powerful might be necessary before the system could be considered deployable in a cockpit. The second area of notable technical risk was the need to develop a natural language understanding system that could process a basic vocabulary of at least 50 or so words in noisy, cockpit-like conditions. This area of technical risk was likely less important than the issue of computing power, but it was still quite important if the system was going to work the way those in charge of the program wanted it to.

In spite of there being notable areas of component technology that required improvement, Director Cooper felt it was important that the SC applications programs begin roughly at the same time as its work developing the broader technology base. Politically, this increased SC spending package was meant to be about applications. Waiting years to start working on the applications projects was not really an option. So, work on the three applications projects began, more or less, right after SC was announced. The SC team, however, did go out of its way to ensure that the more basic research projects got off and running sooner rather than later. Meanwhile, the applications projects often had study stages and preliminary planning periods that may have taken a year or two. I do not get the sense that DARPA made similar attempts to speed this process along the way they did to ensure that computer architecture researchers, for example, got the machines they needed for their research sooner rather than later.

In March 1988, the first major operational milestone for the PA project was hit when the Lockheed Aeronautical Systems Company successfully demonstrated the system it had developed in a non-real-time cockpit simulation. In this demo, the system successfully performed flight tasks like identifying and dealing with a stuck fuel valve, creating a new mission plan as the situation changed, advising the pilot on evasive action, and deploying flares. The machine was able to complete the simulation’s 30 minutes’ worth of real-time computations in 180 minutes, meaning that, at this early stage, the system was about one-sixth as fast as it needed to be. The slow performance was not considered behind schedule by any means. Firstly, much of the software was not even run on the most powerful machines possible at this stage. Additionally, in the early internal writings on the project, it was believed that by around 1990, some parallel computing technology from the DARPA architectures portfolio would be advanced enough to transition the expert systems to run on those machines.
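The arithmetic behind that “one-sixth” figure can be written out explicitly. The short sketch below is only an illustration; the function name is my own, and the only inputs taken from the history are the 30 simulated minutes and the 180 minutes of computation.

```python
def slowdown_factor(simulated_minutes: float, compute_minutes: float) -> float:
    """Return how many times slower than real time a run was.

    A value of 1.0 means the system kept pace with real time;
    values above 1.0 mean it fell behind.
    """
    if simulated_minutes <= 0:
        raise ValueError("simulated_minutes must be positive")
    return compute_minutes / simulated_minutes


if __name__ == "__main__":
    # Figures from the March 1988 demo described above.
    factor = slowdown_factor(simulated_minutes=30, compute_minutes=180)
    print(f"Slowdown relative to real time: {factor:.0f}x")
    print(f"Fraction of the required speed: 1/{factor:.0f}")
```

Put differently, the demo system needed roughly a sixfold speedup from faster hardware, faster software, or both before it could keep up with a live cockpit.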

This bit of planning is quite interesting. Those planning SC’s applications very clearly understood the pace at which computing hardware and architecture were advancing in these years and incorporated a level of anticipation of that progress into their planning. For context, in this period the BBN Butterfly computer, which was a coarse-grained parallel processor and not so powerful yet, was one of the few operational parallel architectures. Massively parallel architectures were still in their very early stages at the time SC was getting underway. Even so, those at the helm of SC felt that, by 1993, small computers would be able to run the types of systems required to operate the PA in real time and then some.

It was the job of the other PMs in the SC portfolio to seed investments and help orchestrate progress via the performer community to make this projected computing progress a reality. I will briefly give the reader an idea of what some of this work looked like. In the period between the mid-1970s and mid-1980s, technological development was occurring elsewhere in the hardware section of the SC portfolio and in industry that would end up becoming far more famous than the PA project itself, and that would, of course, enable many projects like the PA to become a reality in the coming decades. Some of this progress was funded by industry on its own, some by government and DARPA dollars, and often both. While NASA and the Air Force were buying chips from the young Fairchild Semiconductor, DARPA money had been finding its way to Fairchild through projects like the ILLIAC IV (covered in the FreakTakes Thinking Machines piece). Texas Instruments was hard at work on many of its own R&D projects, but many were also pursued in concert with actors like DARPA. For example, in 1983 DARPA approved $6 million to TI to develop compact LISP machine hardware, implementing a LISP processor on a single chip, as long as TI would fund $6 million in simultaneous software research for the device. Projects like the PA were top of mind when funding this TI project. Additionally, DARPA had funded much of the early Warp computer research at CMU, which the university, later in the 1980s, worked with Intel to help miniaturize in developing the iWarp, whose first prototype was operational around 1990. Since this area of the history of computing progress is extensive and, to some extent, understood by many reading this piece, I will say no more on the progress in computing power in this period. Suffice it to say that the actual progress of the field did turn out to be in line with the lofty expectations of those planning SC as they outlined projects like PA in the early 1980s.

As this computing work was progressing, which would help the PA project team overcome its first major technical risk, SC’s portfolio of work on the second major technical risk, speech recognition systems, was also moving along quite well. While many consider speech recognition systems a relatively modern phenomenon, research in these systems was progressing quite steadily for several different performers in the SC ecosystem in the 1980s. By 1987, CMU had developed a speech system that could recognize 1,000 words with an accuracy of over 90% at real-time speed. BBN had also developed a similar system, as well as IRUS, which possessed a vocabulary of approximately 4,500 words in 1986 and was generally considered ready for testing in applications like the Navy-relevant Battle Management (the substantially larger vocabulary is because Battle Management’s speech recognition system was meant to be used in less noisy environments). Unsurprisingly, much of the progress occurring in the area was aided by improvements happening in computer hardware, in this period largely due to successes in VLSI development (covered more in the FreakTakes MOSIS piece).

The progress in component technology was happening alongside the work being done by the teams working directly on the PA project. Extremely pleased with the 1988 demo, which showcased the general feasibility and usefulness of the system in practical situations, and with much of the work on relevant component technology in the SC portfolio going according to plan, the Air Force elected to have the Lockheed/Air Force development team begin adjusting their development efforts. They hoped to have a system that could be put into aircraft to help them fly missions within three years. After one more successful demo in November 1989, which showcased some additional functionality (such as reasoning using more imperfect sensor data, re-implementing programs in faster programming languages, and updating the machines used to run certain expert systems to faster versions), the Air Force shifted the project into its further development phases. The coming stages were meant to get the machine ready to perform in more battle-like timelines and uncertainty conditions.

Unfortunately, the project would not ever make it across the finish line. The project’s fate seems to have become clear around 1991. Much of the component technology was strong, and some of these component improvements and learnings would find their way into similar future systems. For example, the Lockheed team produced extremely useful new insights when it came to the systems engineering of multiple coordinated expert systems combined in a single application. When the expert systems were combined, there were issues with each expert system following its own objective function and sometimes giving the pilot conflicting advice. The Lockheed team came up with a concept called a plan and goal graph to help the system better infer the pilot’s true wishes and deliver advice that was more coherent and in line with the pilot’s wishes. However, certain vital pieces of functionality were just not coming together the way the team had hoped as they attempted to make the system work in real-world situations. Certain pieces of the project that appeared conceptually feasible in the early stages were proving to be hairier than had been anticipated. The technology base was simply not capable of doing certain key tasks like recognizing certain maneuvers and accurately predicting the geometry that would result. But, in many ways, the program had served its intended purpose in DARPA’s portfolio.

Wright’s chief engineer on the project, Captain Sheila Banks, and its program manager, Major Carl Lizza, seemed to clearly understand that while the PA might not make it into a standard Air Force cockpit, it should not be considered a failure from the point of view of Robert Cooper’s DARPA. The two concluded a 1991 paper they had written on the state of the PA program by writing the following:

In accordance with the program goal to provide a pull on the technology base, the program often needed nonexistent technology to implement the design. Uncertainty-reasoning approaches, pilot modeling techniques, and development tools are areas that lacked sufficient research to implement the Pilot’s Associate vision. Verification and validation of knowledge-based systems constitute another area that is lacking techniques and is quickly becoming a program issue. The actual implementation of a system of this complexity uncovers many gaps in technology still to be addressed by the research community.

The project helped facilitate a level of practical learning in the SC portfolio and provided a level of clarity to many basic researchers trying to understand what contexts, roughly, they were attempting to produce technology for. But the project did not result in any immediate revolution in how Air Force pilots operated in their cockpits.

Battle Management Operations

I will give a slightly more high level accounting of some of Battle Management’s operational details. In many ways, the operational structure of the project was similar to PA’s, but the project resulted in more of a clear-cut win in the short run. Where certain project details are either instructive or interesting, I will be more thorough. But I would rather leave more time for ALV as its messy history and complex legacy are extremely instructive. (For more operational details relating to specific hardware, expert systems, and contractors see this document from Battle Management PM John Flynn.)

The Battle Management application project was launched with the goal of deploying the power of expert systems to help Naval decision-makers make better inferences about enemy forces, keep better tabs on their own forces, generate strike options, come up with operations plans, as well as a variety of other tasks — which any modern reader surely knows computers could assist Naval leadership in carrying out. The vision of the system was quite similar to the PA, but in the context of the Navy rather than the Air Force. As was the case with the PA system, the Battle Management system’s wish list of capabilities would likely require improvements in overall computing power from the rest of the technology base. Initial estimates for the computing power required for the system were around 10 billion operations per second — approximately a one or two order of magnitude increase in peak performance in comparison to what was available at the start of the program.

While Battle Management was initially conceptualized as one program in SC’s founding document, it quickly became three separate programs: Fleet Command Center Battle Management (FCCBM), Carrier Battle Group Battle Management (CBGBM), and AirLand Battle Management. In this section, I’ll focus on the two projects that focused on the Navy as a customer — FCCBM and CBGBM — for brevity’s sake. Both systems, similar to PA, were meant to use a GUI, natural language system, and expert systems to help assist Naval commanders in coming up with and executing operational strategies on the ship level (CBGBM) as well as on the theater level (FCCBM).

CBGBM, the system that would be installed aboard a ship, was considered a continuation of a program by Allen Newell and others at CMU that had been ongoing since the 1970s. With the announcement of SC, CMU had proposed continuing the work area under the well-funded SC umbrella. DARPA believed this was a good idea and helped coordinate Naval buy-in to the project. The Naval Ocean Systems Center in San Diego officially bought into the CBGBM project early on. Similarly, for FCCBM, the fleet-level battle manager, DARPA was able to secure buy-in from the HQ of the Pacific Fleet at Pearl Harbor. As the Air Force did with PA, the Navy agreed to provide a level of financial support to these projects at this stage. With that support, DARPA had early buy-in from its customer into the project. DARPA’s working relationship with the Navy on the project would prove to be an exceptionally functional one, enabling a far more effective prototyping approach than was seen in the PA project.

The FCCBM’s (the system meant to help make plans on the fleet level) rapid prototyping strategy, implemented under PM Al Brandenstein, was executed in coordination with the team at Pearl Harbor. Roland and Shiman describe the project’s prototyping strategy as follows:

A test bed was established at CINCPACFLT [Pearl Harbor] in a room near the operational headquarters. The same data that came into headquarters were fed into the test bed, which was carefully isolated so that it could not accidentally affect real-world activities. Experienced Navy officers and technicians tested it under operational conditions and provided feedback to the developers. The resulting upgrades would improve the knowledge base and interface of the system.

This extremely tight customer relationship in the development process ensured several things in the early stages of the project: faster feedback cycles, increased buy-in from the customer, and extreme clarity on the requirements of the system.

As the implementation stages of the projects got underway, the FCCBM team installed its computer hardware at Pearl Harbor in 1985. By March 1986, the test bed environment had been completed. With that infrastructure and scaffolding in place, the expert systems contractors could ensure their systems were tested on data with the highest level of fidelity to real-world data possible, because it was real-world data. In June 1986, the Force Requirements Expert System, the first of the five expert systems for the project, which had been in development since 1984, was installed and began testing in the test bed. By the end of August, the system was successfully demoed to DARPA and Naval leadership. This demo, as well as much of the work on the project, was heavily based on the development of BBN and the Naval Ocean Systems Center’s IRUS natural language generator, a project from DARPA’s technology base. By this time in 1986, IRUS had already achieved a vocabulary of almost 5,000 words and, being an experimental system developed with the knowledge that the Navy would be its initial user, even had a vocabulary and language generation style that could comprehend and respond to queries in language familiar to Naval operations staff.

By 1987, the FCCBM had already proved its worth to Navy staff. The system was capable of performing tasks that used to take fifteen hours in as little as 90 minutes. Research teams frequently seem to generate results like this on paper that either don’t turn out to be true in practice, or, alternatively, are never installed due to fear from customers that the paper results won’t hold up in deployment. FCCBM was able to avoid both of these issues. Since the system was already installed at Pearl Harbor and working on its actual data streams, the results were far from mere paper results. Additionally, the pain and annoyance of initial installation were much less of a factor in the decision than they might usually be because the system was already installed at Pearl Harbor. The first expert system quickly went into operation helping monitor ships in the Pacific while some of the other expert systems — which were further behind in readiness level — were tested until they were deployment ready.

Following a similar approach, CBGBM (the Battle Management system designed to be put on individual ships) was installed for testing on a ship in early 1986. Within a few months, the use of the machine in fleet exercises convinced the Navy that a modified version of the system would be as useful as they’d hoped. Improvements were made to the system before going out on a six-month tour later that year. The technology, at that point, was very much still in the prototyping stage because it could not operate in real time. But as a development project, CBGBM was proving its utility to the Navy.

While these two projects generally progressed quite smoothly, there was one notable friction on the operations side of the project: classified information. Some individuals who worked with the contractors did not have security clearances because they couldn’t get them. Others just didn’t want them. The university research contractors, in particular, often didn’t want clearances. CMU, as an example, was one of the systems integrators for the CBGBM project and had a very good working relationship with the Navy, but largely did not have security clearances. So, this meant that one of the main systems integrators could not — for the most part — actually look at any of the golden, real-world data in the database at Pearl Harbor. Instead, their workflow had to look something like the following: discuss with the Navy what sort of information would be reasonable to use, do their R&D using fabricated data that they hoped would look like the real thing, and twice a year hand over their updated system to the Navy team and wait to see how it worked. While that was a hassle, it seemed to be a small price to pay for having the best minds on the project — the CMU team’s work from campus seemed to port over to the real systems on the ships well enough. So, the parties made it work. The Navy would even send staffers to CMU for long stays to ensure that as much knowledge exchange as possible was done.

The work on the Battle Management applications, similar to PA, was enabled by the development work going on in the greater SC portfolio. This is particularly true of the following areas of the portfolio: computer hardware, natural language understanding, and speech recognition. Beyond the improvements in computing hardware going on in the broader SC portfolio — which was explored in the PA section and had similar implications to the Battle Management projects — much of the speech recognition and natural language understanding (NLU) research in the SC portfolio was done explicitly with Battle Management in mind.

I will provide some clarity as to what some of this work looked like — which I will also cover more in a coming piece on DARPA workhorse computing contractor BBN — since several researchers and ex-DARPA PMs have personally expressed surprise that DARPA’s speech recognition and NLU portfolio has operational successes going back four decades. To many, this area of engineering feels quite young since many of the best methods in use today have been enabled by quite recent technology. However, as far back as the late 1970s, contractors in the speech recognition and NLU areas of the DARPA portfolio were building quite impressive applications with what — by today’s standards — would be considered somewhat quaint computing power and natural language methods.

While DARPA had largely reduced the contracts it let in speech recognition in the mid-1970s under Director Heilmeier, some speech recognition work continued in this era that would prove key to programs like BBN’s IRUS system, which was deployed in the Battle Management system in the late 1980s. Several key pieces of work going on in the interim were: Baum et al.’s continued work developing hidden Markov models (HMM) at the Institute for Defense Analyses, Jim Baker’s HMM-enabled Dragon speech recognition system built at CMU, and BBN’s applied work on various speech recognition and compression projects for paying customers. On the natural language understanding front, BBNers and researchers related to BBN — such as the Harvard and MIT grad students of professors who worked part-time at BBN — made new discoveries and first-of-their-kind implementations in augmented transition networks and structured inheritance networks in this period. Both of these technologies — which seem to have been replaced by more advanced methods by the late 1990s — proved extremely useful in BBN’s IRUS system, which demonstrated the feasibility of certain natural language tasks in the context of applications like Battle Management.

Even while DARPA was not giving contracts for speech recognition, believers in the technology at frequent DARPA contractors like MIT, CMU, and BBN continued to push the field forward. By 1986, the IRUS system already had a speech recognition vocabulary of almost 5,000 words and an accuracy rate of over 90%, which was enabled by some of BBN’s work on alternative models. The gap between IRUS’s relatively massive vocabulary and the vocabulary of under 100 words in the PA’s speech recognition system was largely due to the noisy conditions of Air Force cockpits. This constraint limited the scope of the PA’s vocabulary to simple words and something like fifty commands along the lines of “Fire!” In contrast, DARPA knew from the beginning that Battle Management would be a more conducive environment for speech recognition systems. So, from the start, SC management had ambitions of building a system capable of utilizing a vocabulary in the 1,000 to 10,000-word range in the next five years or so.

As the battle management application projects were ongoing, there were eight different contractors across nine projects in the broader DARPA ecosystem pursuing various research projects in the area of speech recognition. To help wrangle all of these findings into technology that could be folded into future generations of battle management systems, CMU was given a contract as an “integrator.”

In the SC portfolio, integrators were sometimes given separate contracts and tasked with the primary responsibility of finding ways to integrate the cutting-edge findings from the performers’ work in the broader technology base — who were supposed to be keeping applications in mind anyway — into the final applications. An integrator contract was given in the PA project as well. In that project, five groups of performers who were working on eight different DARPA programs fed relevant results to TI, who was tasked with integrating the new knowledge into subsequent generations of the PA. In theory, with prime contractors like Lockheed and university researchers doing their jobs, this role should not be necessary. But in practice, the integrators could often make a big difference in the level of ambition of the integration work.

In the end, the speech recognition work went so well that SC management never needed to change the initial, lofty goal of achieving a system with a vocabulary of roughly 10,000 words. And that’s saying something; oftentimes, lofty goals are set at the beginning of projects like this with the understanding that, after a period, the PM, relevant performers, and DARPA management will touch base and agree on more reasonable goals. However, revising the goals was not necessary in this case.

The other area of battle management’s language work — NLU — also had integrators that it leaned on. The broader NLU applications work was made up of two integrators and five other teams of performers. The integrators were both teams. The first team, in charge of integrating text understanding, was made up of NYU researchers and the System Development Corporation — a frequent DARPA contractor since its systems engineering work on the SAGE air defense system. The second team, in charge of integrating broader work in natural language query processing, was made up of BBN and the Information Sciences Institute (which is the star of the FreakTakes piece on MOSIS). This natural language understanding work also met the initial, non-quantitative goals that SC’s planners set out: the final users were able to provide the system new information and get understandable feedback in return explaining the system’s “thought process.”

The Naval Battle Management projects clearly accomplished their short-term goals. The primary goal of the program, on paper, had been to help the Navy with supporting and planning in areas where time constraints are extremely critical. And the systems did just that. For some important tasks done at locations like Pearl Harbor, they reduced the time it took to do certain planning tasks from 15 hours to 90 minutes — along with the increase in computational reliability that comes with using a computer. Additionally, these Battle Management systems were in place and working within five years. This not only meant that the projects were a success as quickly as possible, but also that the projects helped DARPA Directors justify budgets and convince increasingly austere Washington decision-makers in the late 1980s that their work was saving money for the taxpayers even if it often looked somewhat speculative. In many ways, Battle Management was the PR success that DARPA had hoped the ALV would be.

Autonomous Land Vehicle Operations

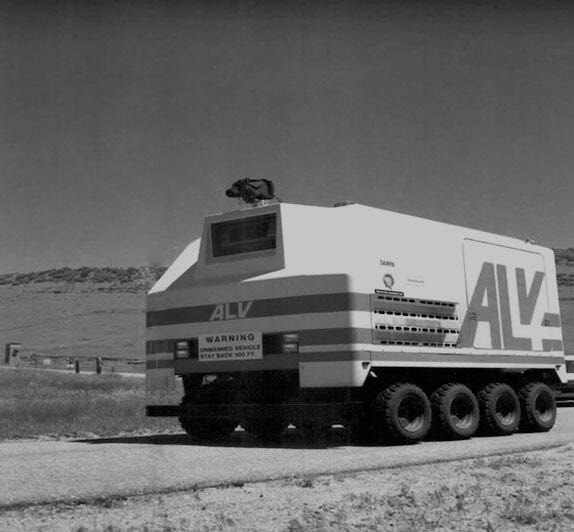

The third application placed at the heart of SC’s founding document was the Autonomous Land Vehicle (ALV). As the SC program wore on, ALV would also come to be one of SC’s biggest disappointments — at least in the short term. The ALV was envisioned as an autonomous recon vehicle that could navigate battlefields — obstacles and all — to conduct reconnaissance missions and report its findings back to soldiers who did not have to leave safer positions to obtain the information.

From a mechanical perspective, DARPA hoped for a machine that could travel over thirty miles in a single trip with top speeds of almost forty miles per hour. Of course, machinery existed that could mechanically hit these benchmarks. However, DARPA wanted a machine that could do all of this while being autonomously operated. To do this, many computational abilities would need to be developed for the system. The computational tasks required for an ALV to successfully work in battle included: route planning, navigating via visual analysis of physical landmarks, rerouting and avoiding surprising obstacles that were not in the route plan, ingesting useful information on the target, and transmitting that information to a military team. At the time, it was believed that performing these tasks would require an expert system that could run as many as three times more rules per second than current expert systems. Additionally, DARPA believed that the computer required to run the machine would not just need to be small — something like 4 ft x 4 ft x 4 ft — but would also need to be powerful enough to perform ten to one hundred billion von Neumann instructions per second. This was a tall task, as contemporary von Neumann machines were generally limited to around thirty or forty million instructions per second. This need for a roughly three-order-of-magnitude improvement in computing meant that the ALV project’s success relied even more strongly on improvements in computing power than the PA and Battle Management projects did.
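As a rough check on the size of that gap, the figures quoted above can be compared directly. The short sketch below uses only the numbers in this section (thirty to forty million instructions per second for contemporary machines, ten to one hundred billion for the target). The variable names are purely illustrative.

```python
import math

# Figures quoted in the text, in instructions per second.
contemporary_ips = (30e6, 40e6)   # typical von Neumann machines of the era
target_ips = (10e9, 100e9)        # what DARPA believed the ALV would need

# Smallest gap: low end of the target vs. the fastest contemporary machine.
min_ratio = target_ips[0] / contemporary_ips[1]
# Largest gap: high end of the target vs. the slowest contemporary machine.
max_ratio = target_ips[1] / contemporary_ips[0]

print(f"required speedup: {min_ratio:.0f}x to {max_ratio:.0f}x")
print(f"orders of magnitude: {math.log10(min_ratio):.1f} to {math.log10(max_ratio):.1f}")
```

Under these figures the required speedup falls somewhere between about 250x and about 3,300x, or roughly two and a half to three and a half orders of magnitude, which is consistent with describing the gap as about three orders of magnitude.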

The project sought to tackle problems in computer vision that modern autonomous vehicle research groups are still working through today. However, Clinton Kelly was confident that enough legwork had been done on the research front for DARPA to begin creating a program as a technology pull to bring DARPA’s vision work to practicality. This confidence derived in no small part from DARPA’s Image Understanding program, which had kicked off in the mid-1970s. The Image Understanding (IU) program — sponsored by IPTO and then-Director J.C.R. Licklider — was formally spun up as a cohesive program on the heels of progress in DARPA-funded projects like DENDRAL in the 1960s.

DENDRAL was not computer vision per se — in that it could not actually ingest a photo — but the system could utilize a text representation of some molecular structures and used expert systems to perform analyses on them. Projects like this demonstrated that, once information taken in visually can be coded symbolically, systems were in progress that could make intelligent use of the information. Somewhat naturally, “signal-to-symbol mapping” became a key point of emphasis in the IU program — which would allow visual information from cameras or sensors to be encoded in such a way that systems could act intelligently on the information. Somewhat surprisingly, there were even some practical successes from the early-IU years. One example was DARPA and the Defense Mapping Agency’s (DMA) joint funding of a system — housed at the Stanford Research Institute — to automate certain cartographic feature analysis and assist the DMA in certain mapping and charting functions. However, most of the work done by the performers in IU in this period was quite far from applications largely because the field was young and there were not many clear-cut applications for the work just yet. However, it should be noted that as IU was getting underway in 1975, Licklider and others hoped for the work to progress in such a way that applications were within reach by 1980.

Even with the ALV project kicking off in 1985, ten years after the start of IU, many veteran vision researchers felt this push for an ALV-type application might be a bit premature. Kelly and Ronald Ohlander — the DARPA PM who oversaw vision-related projects in this era — polled some veteran IU researchers to ask what they felt would be reasonable benchmarks and timelines for the project. Kelly told Roland and Shiman in an interview that he felt the answers were far too conservative and that he “took those numbers…and sort of added twenty percent to them.” When the researchers pushed back, they settled on a compromise. Ohlander noted to Roland and Shiman that he thought the program to be “a real stretch” — far more than the other applications.

This general sentiment could also be seen with ALV’s lack of early buy-in from its intended customer: the Army. The Army was the intended customer for the ALV, but Army leadership saw no acute need for a robotic vehicle at the time and, thus, did not commit to helping fund its early development. The project, however, was seen by Kelly — and likely Cooper — as a fantastic pull mechanism for DARPA’s image understanding work. DARPA — as it did with other projects in this period such as the ILLIAC IV and Connection Machine — was hoping that providing funding and a contract for a machine with clear goals that required improvements to its component technology could help bring a young area of research to a new level. As Richard Van Atta wrote in one of his technical histories of DARPA:

Before the ALV project, the vision program had centered on defining the terms and building a lexicon to discuss the mechanics of vision and image understanding. The ALV was one of the first real tests of IU techniques in practice.

Even if the technology proved to be out of reach of his generation of researchers, Ohlander acknowledged that the researchers’ work deploying their ideas on a real-world test bed would expose the shortcomings of their approaches and help move the field along faster than it would otherwise.

With the kick-off of SC, funding for all vision-related work in the SC portfolio expanded rapidly. IU’s budget abruptly increased from its usual $2-$3 million a year to $8-$9 million a year. The ALV application program itself was, to some extent, co-managed by Clinton Kelly as well as its official PM William Isler. They planned for one prime contractor to be in charge of engineering the vehicle and gradually incorporating the new component technology from the technology base into the machine on an ongoing basis. The program managers felt that one of the major aerospace companies from the regular defense contracting pool would be a good fit for the project. While these groups did not have experience in computer vision — almost nobody did — they did have experience overseeing projects on this scale of complexity and had all proven quite adept at large-scale systems integration. Some of these companies, at least, had experience with machines like remotely operated vehicles and basic forms of AI systems. As they were submitting proposals to DARPA’s January 1984 qualified sources sought, these aerospace companies understood that the project would be carried forward with whichever one of them won in partnership with at least one university department. The partnership was intended to help promote the integration of the expanding capabilities of the DARPA technology base into prototypes of the ALV as quickly as possible.

The ultimate winner was Martin Marietta — which would later become the “Martin” in Lockheed Martin.1 The contract was to last 42 months and was for $10.6 million — with an optional extension of $6 million for an additional 24 months. DARPA chose the University of Maryland (UMD) as the university research partner to assist the company in folding technology improvements from the technology base into the machine in the early stages of the project. UMD was a well-known entity to the AI portion of DARPA and its PMs as it was one of the primary university research centers contributing to IPTO’s Image Understanding work. So, the university’s primary task was to get the practical vision system that they had been working on folded into the machine so the Martin team could begin working towards the goals of its demos as early as possible.

As implementation work got underway, the ALV quickly adopted an accelerated schedule. Towards the end of 1984, DARPA moved up the date of the machine’s first demonstration to May of 1985 — six months earlier than originally planned. The vehicle would have to identify and follow a basic road in a demo with no obstacles of note. As Martin Marietta rushed to assemble its first vehicle, it largely relied on off-the-shelf components that were tried and true. The mechanical parts such as the chassis and engine were standard, commercially available parts. The software and hardware for the machine’s computing were not exactly cutting edge. One of the two types of computers chosen — a VICOM image processor — may have been chosen because one of the University of Maryland researchers had extensive experience working with the machine and stood the best chance of quickly getting the vision program up and running with familiar equipment. The second type of computer — Intel’s single-board computers, which were more or less personal computers — was used to control the vehicle itself. The camera that fed into the vision system was a color video camera mounted at the top of the vehicle and paired with several charge-coupled devices to transmit the visual information to the computer in a usable format.

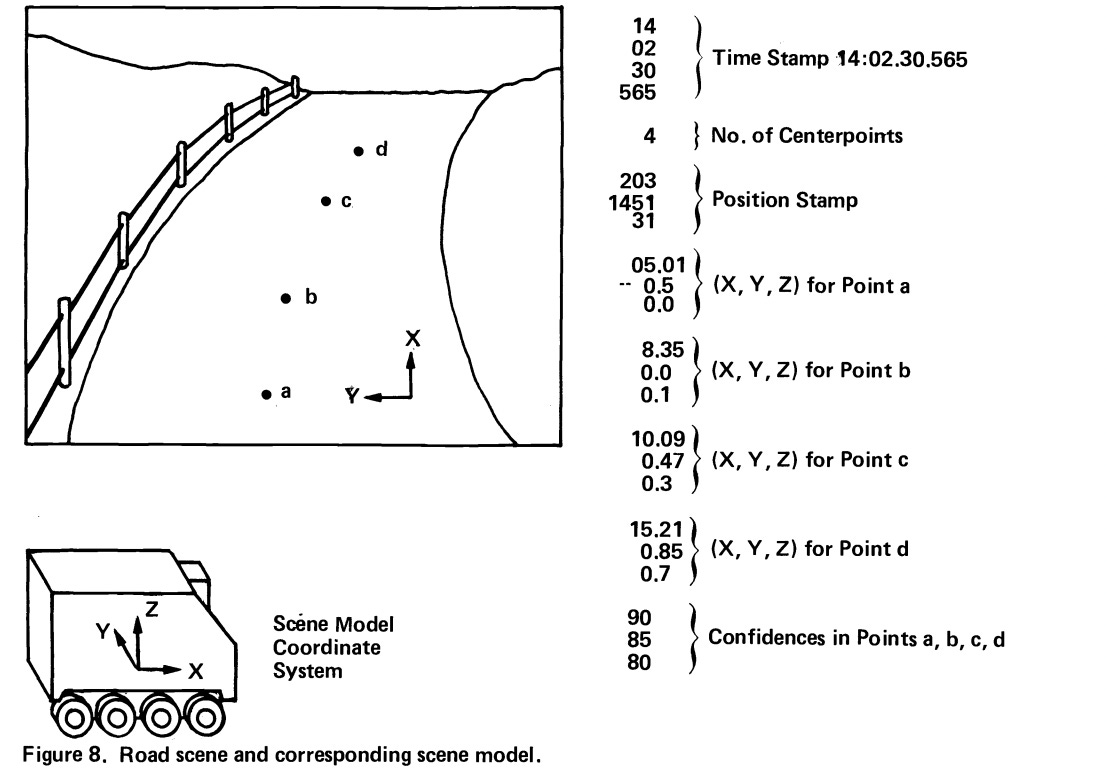

The first demo was quite basic. The goal was for the machine to follow the center line of a road at Martin Marietta’s testing facility for about 1,000 meters. To give the reader an idea of how the vision system encoded real-world image data in a usable way, please see the following figure provided in a 1985 Martin Marietta report explaining what a digital scene model would look like for a given road image.

With basic image processing, some engineering cleverness, and standard geometry for calculating things like distance traveled and curve angles for steering, the machine seemed like it might be able to navigate a basic course quite well. The machine was powered by two major categories of visual decision-making software. The first category was the software dedicated to understanding exactly what the ALV’s camera was seeing. This software processed and enhanced the images so it could then use some of UMD’s edge-tracking algorithms to find the various boundaries in the image. Using these boundaries, the software calculated where the center line seemed to be. Another special algorithm would attempt to plot a 3D grid.

This work was passed to the second software system which was meant to perform some basic reasoning tasks using the current 3D grid model as well as the 3D grids from the timestamps immediately preceding the current one. At this stage of the process, the reasoning system largely attempted to assess the reliability of the current image, given what it had seen in the previous images. If the image seemed unreliable, the system attempted to make up a more believable path that fit with the images seen previously. If this switch happened, in that moment the reasoning system was seen as driving the vehicle rather than the vision system — since the vehicle was operating off of a pre-stored model rather than the current, low-confidence model built by the vision system. This backup plan was necessary for achieving the goals set by the demo because, given the slow processing speeds, the vehicle’s speed would often outrun its visual processing. So, this was necessary to keep the vehicle moving without stopping too frequently or going too slowly.
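To make the hand-off between the two systems concrete, here is a minimal sketch of that kind of arbitration logic. It is not Martin Marietta’s or UMD’s actual code. The data structures, the confidence score, and both thresholds are assumptions invented for illustration, and the fallback is simplified to reusing the last trusted path rather than extrapolating a new one.

```python
from dataclasses import dataclass
from typing import List, Tuple

Path = List[Tuple[float, float]]  # (x, y) waypoints in meters, vehicle frame


@dataclass
class SceneModel:
    path: Path          # road center line proposed by the vision system
    confidence: float   # hypothetical 0..1 reliability score


def mean_offset(a: Path, b: Path) -> float:
    """Average lateral (x) disagreement between two paths, point by point."""
    n = min(len(a), len(b))
    if n == 0:
        return float("inf")  # nothing to compare, so treat as inconsistent
    return sum(abs(a[i][0] - b[i][0]) for i in range(n)) / n


def choose_path(current: SceneModel, history: List[SceneModel],
                min_confidence: float = 0.5,      # assumed threshold
                max_jump_m: float = 1.0) -> Tuple[str, Path]:  # assumed threshold
    """Return which subsystem is 'driving' and the path to follow."""
    if not history:
        return "vision", current.path
    previous = history[-1].path  # most recent trusted scene model
    consistent = mean_offset(current.path, previous) <= max_jump_m
    if current.confidence >= min_confidence and consistent:
        return "vision", current.path
    # The current image looks unreliable: keep following the stored model.
    return "reasoning", previous


good = SceneModel([(0.0, 0.0), (0.1, 5.0)], confidence=0.9)
bad = SceneModel([(3.0, 0.0), (3.5, 5.0)], confidence=0.2)
print(choose_path(good, []))       # first frame, nothing to check against
print(choose_path(bad, [good]))    # low confidence and a large jump
```

The design choice worth noticing is that the vehicle never has to stop and wait for a good image. When the newest scene model fails the check, it simply keeps following the stored one, which is why the share of the course driven under vision control became a natural measure of how well the vision system was doing.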

The first demo — a standard road-following task — went off rather successfully. The vehicle traveled the approximately 1,000-meter course in about 1,000 seconds and did so traveling about 85% of the way under vision control rather than reasoning control. The machine did this while generating over 200 scene models and trajectories to help inform the vehicle along the way. On the heels of this success, the Martin and UMD team went right back to work because the next demonstration was in six months. This demonstration was considered less of a layup than the first one had been. The course for the demonstration — which the ALV had to navigate at 10 km/h — required that the ALV navigate a sharp curve at no less than 3 km/h, progress along several straight stretches of road, and, lastly, stop at a T intersection to turn around and repeat the course. It had to do all of this without the reasoning system overriding the vision system.
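A quick back-of-the-envelope calculation shows how big a step the second demo was. The sketch below uses only the approximate figures quoted above. Since the text says “over 200” scene models, the per-model spacing it computes should be read as an upper bound, and the variable names are illustrative.

```python
# Approximate figures for the first demo, as quoted in the text.
course_m = 1_000          # course length in meters
run_s = 1_000             # run time in seconds
vision_fraction = 0.85    # share of the course driven under vision control
scene_models = 200        # "over 200", so the spacings below are upper bounds

avg_speed_kmh = course_m / run_s * 3.6      # m/s converted to km/h
vision_m = course_m * vision_fraction       # distance under vision control
meters_per_model = course_m / scene_models
seconds_per_model = run_s / scene_models

# Speed required on the second demo's course.
second_demo_kmh = 10
speedup_needed = second_demo_kmh / avg_speed_kmh

print(f"first demo average speed: {avg_speed_kmh:.1f} km/h")
print(f"distance under vision control: {vision_m:.0f} m")
print(f"one scene model per {meters_per_model:.0f} m / {seconds_per_model:.0f} s at most")
print(f"second demo speed is {speedup_needed:.1f}x the first demo average")
```

In other words, the first run averaged about 3.6 km/h, roughly a walking pace, with a fresh scene model at most every five meters or five seconds. The second demo asked for nearly three times that speed while also taking away the reasoning system’s ability to override the vision system.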

To do this, the team would have to add more sensor information than just the single video camera to take in all the required information. Additionally, they had to re-work the vision software to somehow make use of all of this new information. In attempting to navigate this much harder problem, Martin relied on a DARPA-funded laser range finder that had been developed by the Environmental Research Institute of Michigan. The device could be attached to the front of the vehicle and was used to scan — up and down as well as side to side — the terrain in front of the vehicle to identify obstacles and other important features. Those working on the software attempted to find a way to combine this knowledge into a single 3D model of the scene. This knowledge was combined with the reasoning system, which now contained a digital map of the track itself that the machine could use as a reference — the Army’s Engineer Topographic Laboratories had developed the map. The vehicle, impressively, passed this second test as well in November 1985. At this point, the ALV application seemed to be making extremely satisfactory progress. The technology the ALV used in these early demos — including the laser range finders — was not exactly cutting-edge, but the machine was hitting its practical benchmarks and hopefully could begin subbing in more cutting-edge component technology as the demonstration benchmarks continually raised the bar for the ALV.

While the work on these early demos was happening at Martin Marietta, Martin and other ALV-related component contractors were also holding meetings at regular intervals — often quarterly or as frequently as monthly — discussing project needs, developments in the technology base, and how to delegate tasks. These conversations with research teams working on broader vision work were particularly important for work on the demos scheduled to happen in later-1986 and beyond — when it seemed like Martin would begin having acute needs to integrate more work from the technology base into the ALV to meet the goals of the demos. Much of what went on at these meetings was not so different from what happens at many DARPA PI meetings — with the standard presentations of progress and teams getting on the same page.

One logistical point of particular note was that, as some of the higher-level planning meetings played out, the project structure came close to being shaped very heavily by the arbitrary preferences of the component contractors. The contractors who found themselves working on the reasoning system vs. the vision system had very different interests and incentives. Most of the reasoning contractors were commercial firms and had the incentive to fight for a broader scope of work and more tasks getting assigned to the reasoning system because that meant better financial returns to the company. Most of the vision contractors, such as the University of Maryland, were academics and largely content to let that happen — or, rather, not as committed to fighting for a broader scope of work as the companies — if it meant they got to focus primarily on specific vision sub-tasks that were more in line with the size and scope of projects academics often undertook. For example, the private companies felt that building up much of the system’s mapping capabilities, knowledge base, and systems-level work should fall to them.

CMU and one of its project leaders — Chuck Thorpe — seemed to be the strong voice of dissent on many of these matters from the academic community. Ever since CMU had become a major contractor in DARPA’s Image Understanding work, it had taken a rather systems-level approach to attacking vision problems — working with civil and mechanical engineering professors like Red Whittaker to implement their vision work in robotic vehicles — rather than primarily focusing on the component level. The CMU team far preferred this approach to things like paper studies — in spite of the increased difficulty. So, as the project progressed and these conversations were ongoing, both sides had at least one party fighting to work on component work as well as some amount of integration work prior to Martin’s work integrating components in the last mile — often right before a demo.

While that future planning was taking place in the background, it was becoming clear that Martin Marietta’s pace of actual technical progress was not as positive as the demos had been. Their work implementing basic vision algorithms proved finicky as small changes like the lighting, road conditions, and shadows caused the vehicle to behave very differently from one day to the next. Each new software program or change seemed to cause a new problem which took time to debug. It was messy work. Additionally, to the dismay of Martin with its strict demo timelines, it began to seem like a large number of specific vision algorithms might be required to handle different, niche situations such as even conditions, uneven conditions, etc. This would take significantly more work than if one unified vision approach could cover most situations — as the team had initially hoped.

Meanwhile, demo timelines began to get tighter and tighter. More demo-like activities kept being added as more PR was brought to the program by Clinton Kelly — news releases were issued, the press was invited to watch some of the vehicle’s runs, etc. In the midst of all this demo pressure, the ALV project began to embody a negative “demo or die” principle more than it served as a pull mechanism to effectively move the technology base forward. As Martin’s actual progress slowed and the demo schedule tightened, the company began to focus only on achieving the benchmarks of the next demonstration at the expense of developing truly useful, new technology. For example, for one of the demonstrations, the obstacle avoidance algorithm — put together by one of the component technology contractor companies — worked in such a narrow set of circumstances that it could not even be used successfully in a parking lot, but it could work on the roads the demonstration took place on.

As Martin was rushed in these middle years of the program, the ALV was clearly not acting as the test bed for the SCVision projects — the name encompassing much of the vision work in PM Ronald Ohlander’s AI portfolio — that it was meant to be. Much of Martin’s staff time and equipment was dedicated to hitting the next demo and not finding adequate time to serve this more future-looking function. It does seem that the team was truly busy and not just blowing the researchers off. By April of 1986, the 1.5-year-old ALV had logged 100,000 miles and needed to have its engine replaced since it had worn out. In one two-month period of testing prior to a demo, 400 separate tests were conducted using the machine. Preparations for a subsequent demo might begin as much as six months before — often not long after the last demonstration had just finished. In essence, things were becoming very crunched at Martin.

In 1985, in the period between the first and second ALV demonstrations, Ronald Ohlander — a former student of Raj Reddy at CMU — decided it might be necessary to rely on a contractor separate from Martin Marietta to integrate the component technology improvements from the technology base more ambitiously. Ohlander wanted to issue a contract for a scaled-down version of the ALV operating from within the SCVision program. The integration contractor in charge of the machine would be in charge of testing the computer vision algorithms of other research teams that fell under the SCVision umbrella.

Ohlander, in doing this, adeptly observed that the prime contractor on the ALV project might be under pressure to meet its contract milestones and not be sufficiently motivated to fold the most cutting-edge component technology coming out of his SCVision program into the ALV. In addition, he seems to have felt a level of unease relying on a machine, contractor, and program management from a different DARPA office — Isler and Kelly largely operated out of the Engineering Applications Office — to demonstrate the usefulness of the investments coming out of his own office — IPTO. Ohlander explained the decision to Roland and Shiman in an interview, stating:

It wasn’t to outshine them or anything like that…but we were…used to doing things in universities with very little money and getting sometimes some startling results. So I…had a hankering to just see what a university could do with a lot less money.

In the end, the contract would go to Carnegie Mellon. CMU had already, unlike most of the component contractors in the SCVision portfolio, been testing its component technology on its own autonomous vehicle: the Terregator. CMU used the Terregator — for often slow and halting runs — to test the algorithms developed for its road-following work. The Terregator proved extremely useful to this research, but its use had also helped the CMU team learn enough to outgrow the machine.

In CMU’s ongoing efforts to implement sensor information from a video camera, a laser scanner, and sonar into one workable vehicle, the CMU researchers had come to realize that they needed funding for a new vehicle. CMU needed a vehicle that could not just accommodate the growing amount of sensor equipment, but also one that could carry out its tests with computers and grad students either on board or able to travel alongside the vehicle. This would allow bugs to be fixed and iterations between ideas to happen much faster. The vehicle that the CMU computer vision and robotics community was casting about trying to get funding for would come to be known as the NavLab. Fortunately, what they were seeking money for seemed to be exactly what Ohlander felt the SCVision portfolio needed. The funding for the NavLab began around early 1986. The funding set aside for the first two vehicles was $1.2 million, and it was estimated that any additional NavLab vehicles would cost around $265,000.

As Martin was somewhat frantically taken up with its demo schedule, CMU, with its longer time windows, was free to focus on untested technology as well as technology that ran quite slowly but seemed promising in terms of building more accurate models of its environment. As the CMU people saw it, if anything proved promising they could upgrade the computing machine used to operate that piece of the system later on. The NavLab team was working closely with CMU’s architecture team that was developing Warp systolic array machines from within Squires’ computer architectures portfolio. The CMU architecture team was developing its equipment with vision applications in mind as an early use case. As a result, they were happy to change the order of functionality they implemented in order to account for the acute needs of the SCVision team. Additionally, if a piece of vision technology proved promising for the NavLab but could not be sufficiently improved with the immediate help of a Warp or some other new-age computing machine, the vision researchers likely figured that sufficiently powerful hardware would exist soon enough to push the idea along, even if not in the next year. Lacking the hard metrics of yearly demos with speed requirements loosely matching how fast the vehicle would need to go in the field, the CMU team could afford to think more along the lines of “how do we build a machine that is as accurate as possible, even if it moves really slowly today?”

As CMU took up the mantle of building SCVision’s true test bed vehicles, it also took over the role of the SCVision portfolio’s true “integrator” for Martin’s ALV. Carnegie Mellon submitted a proposal to carry out this integration work along with building the NavLab. From a high level, this made sense, even though neither the ALV nor the SCVision project had set aside money for an integration contractor. Martin was meant to integrate work from the technology base on its own, but around mid-1985, it was becoming clear that it was not doing so effectively. Academic departments were often close to the evolving frontier of their own field, but often did not have the project management skills and scaffolding to do great integration work. CMU was somewhat exceptional in that it did this sort of thing quite frequently. Clinton Kelly, while apparently not thrilled about the idea since he was a massive supporter of the ALV project, agreed to the arrangement.

As 1986 went on, Martin Marietta was able to overcome issues with its vision system well enough to continue passing its demos, which required the machine to go on larger tracks at faster speeds, such as 20 km/h on a 10 km track with intersections and some obstacles. As 1987 approached, plans were being made to upgrade the computing equipment in the machine; some of the new computers were developed in Squires’ architectures portfolio. Additionally, as the demos in the coming years were slated to take a marked step up in terms of the complexity of their missions and terrains, the Martin team’s relations with CMU as the new integration contractor, a bit cold at first, warmed considerably.

Personnel from both orgs kept in regular touch and visited each other’s sites. Martin even went as far as to enroll a member of its staff as a grad student under Chuck Thorpe to serve as a liaison between the two. In mid-1986, CMU began helping Martin install software for map planning, obstacle detection, sensor calibration, and new image recognition functions that it had developed and integrated on its own machines on campus. This period also saw specialized tools developed so CMU could more easily run its code without alterations on Martin’s machines — which ideally would have been done earlier in the project, but better late than never. The machine was steadily improving. In the summer of 1987, when one of the private sector contractors — Hughes — was at Martin’s Denver testing facility to test its planning and obstacle avoidance software, the ALV made its first extended drive in country conditions on a route it had selected for itself using only map data and sensor inputs. As Roland and Shiman wrote of the rather triumphant run:

The vehicle successfully drove around gullies, bushes, rock outcrops, and steep slopes.

However, that mid-1987 period would prove to be the high water mark of the ALV application program. After the ALV’s November 1987 demo, a panel of DARPA officials and technology base researchers met to discuss phase two of the program. Roland and Shiman described the titanic shift in how the ALV project and the SCVision portfolio operated in the wake of that meeting as follows:

Takeo Kanade of CMU, while lauding Martin’s efforts, criticized the program as “too much demo-driven.” The demonstration requirements were independent of the actual state-of-the-art in the technology base, he argued. “Instead of integrating the technologies developed in the SC tech base, a large portion of Martin Marietta’s effort is spent ‘shopping’ for existing techniques which can be put together just for the sake of a demonstration.” Based on the recommendations of the panel, DARPA quietly abandoned the milestones and ended the ALV’s development program. For phase II, Martin was to maintain the vehicle as a “national test bed” for the vision community, a very expensive hand servant for the researchers.

For all practical purposes, therefore, the ambitious ALV program ended in the fall of 1987, only three years after it had begun. When phase II began the following March, Martin dutifully encouraged the researchers to make use of the test bed but attracted few takers. The researchers, many of whom were not particularly concerned about the “real-world” application of their technology, showed more interest in gathering images from ALV’s sensors that they could take back to their labs.

The cost of the test bed became very difficult to justify. Phase I of the program had cost over $13 million, not a large sum by defense procurement standards, perhaps, but large by the standards of computer research. Even with the reduced level of effort during phase II, the test bed alone would cost $3–4 million per year, while associated planning, vision, and sensor support projects added another $2 million. Perhaps most disappointingly for Kelly, the army showed little interest in the program, not yet having any requirement for robotic combat vehicles. One officer, who completely misunderstood the concept of the ALV program, complained that the vehicle was militarily useless: huge, slow, and painted white, it would be too easy a target on the battlefield. In April 1988, only days after Kelly left the agency, DARPA canceled the program. Martin stopped work that winter.

Autonomous Land Vehicle Results

The Autonomous Land Vehicle hit its agreed-upon, quantitative benchmarks in its early demonstrations. Successive iterations of the ALV also incorporated many technologies from the SC technology base, such as BBN’s Butterfly processor, CMU’s Warp, and other vision technologies that CMU and others had helped develop. If the Army had been excited by the far-off sounding application and the ALV’s progress in the early demos, the program would likely have stood a much better chance of continuing on schedule. However, since the Army was not yet interested in autonomous recon vehicles, the ALV’s biggest service to DARPA and its portfolio was as a technology pull mechanism to help move its technology base forward in a coordinated fashion. And since Martin Marietta had proven rather lackluster in the integration department, DARPA’s decision in late 1987 to wind down the ALV project and, instead, put more emphasis on the NavLab makes sense.

Work in the spirit of the ALV largely lived on in CMU’s NavLab efforts. CMU’s somewhat unique setup, running an engineering research program equipped with de facto program managers from within an academic department, proved extremely effective in carrying out this project. With the organization dedicated to attacking an extremely concrete goal like autonomous driving, the CMU team was able to keep a clarity of vision throughout the project while maintaining a structure that was less strict than a company but more organized than a traditional academic department. CMU would go on to make very noteworthy contributions in the years after the ALV was wound down. In an IEEE oral history, CMU NavLab program manager Chuck Thorpe describes the steady practical progress of the NavLab team, comparing it to his newborn son, saying:

My son was born in ’86, and we had contests to see when NavLab 1 was moving at a crawling speed, my son was moving at a crawling speed. When NavLab 1 picked up speed, my son was walking and learning to run. NavLab 1 got going a little faster, my son got a tricycle. I thought it was going to be a 16-year contest to see who was going to drive the Pennsylvania Turnpike first, because they were each progressing at about the same speed.

Even without consistent proof-of-progress demos such as those that drove the ALV project, the interdisciplinary, practically minded academic team at CMU was making steady progress. But the team also received one of those unexpected jolts of progress that researchers who regularly dive down riskier rabbit holes hope to experience. One of CMU’s grad students working on the project, Dean Pomerleau, published a 1988 paper on a system called ALVINN that would be a major step in showing the future of the field. Thorpe finished the paragraph in which he compared the NavLab’s steady progress to his son’s, recounting:

…I thought it was going to be a 16-year contest to see who was going to drive the Pennsylvania Turnpike first, because they were each progressing at about the same speed. Unfortunately, this really smart graduate student named Dean Pomerleau came along and built a neural network technique, which managed to be the most efficient use of the computing researchers that we had, and the most innovative use of neural nets at the time, so in 1990, he was ready to go out and drive the Pennsylvania Turnpike.

Thorpe then recounted how, by 1995, a later version of the NavLab running on a newer vision system, RALPH, took things even further. RALPH’s approach, which Pomerleau also came up with, combined pieces of ALVINN’s neural net approach with a new model-based approach that was similar in spirit to simpler approaches used in the ALV. Using RALPH’s vision system, the NavLab was taken by Pomerleau and another student from D.C. to San Diego, driving 98% of the way autonomously. Things like “new asphalt in Kansas, at night, with no stripes painted on it,” flummoxed the machine. But it was plain for all to see: the technology was taking massive steps forward.

Lessons Learned (and Caveats) From All Three Programs

The legacy of the ALV project is more complicated than that of PA and Battle Management, but it may have done more good in shaping the technology base than the other two projects combined. All three projects had their bright spots as well as their weaknesses.

In terms of customer relationships, PA and Battle Management had significantly better relationships with their armed services customers than the ALV had with the Army. Battle Management’s practical success in terms of positive impact on its customer, the Navy, seems to have outdone the practical success of the PA. But it is hard to make that out to be solely the fault of the PA or its management team. After all, in many situations in ARPA-like orgs, you simply get the customer you get.

That being said, Battle Management certainly provides a great exemplar of how, with the right customer, to integrate a test bed as close to the production process as possible to minimize the number of annoyances that can arise when translating technology built in lab environments to real-world environments. This strategy from the Battle Management team also helped mitigate the organizational and logistical hold-ups that often result when looking to implement technology from a remote test bed.

The PA, in spite of its lack of success in pushing a truly useful product out of the program, proved to be a somewhat successful pull mechanism for the speech recognition and natural language understanding technology base. Let’s remember, to many, it was not crystal clear that speech recognition and NLU research was ready for translation into products. The PA application and associated funding on related problems in the technology base helped harness the efforts of DARPA’s performers to work on common problems. This, in the end, helped push certain areas of research into practice. In other cases, the PA highlighted areas that the field needed to work on.

The ALV, in contrast to these two projects, never had the customer relationship of PA and Battle Management. Not even close, really. The Army did not want the ALV; the Air Force wanted a working PA and the Navy was extremely enthusiastic about Battle Management. However, those at DARPA understood that the Army was not excited about getting involved early on with this project. So to judge the program as a near-term failure as an application is somewhat fair, but also largely misses the point. The ALV was something different than the other two applications: an extreme pull on the DARPA technology base. And, in that, the program did a much better job. A large sum of money and energy may have been spent non-optimally pursuing the Martin Marietta work when it was the prime contractor. But DARPA was also relatively quick to redirect the portfolio after several years of watching its broad array of contractors work and assessing their approaches and their results. In the end, CMU proved to be better suited to help push the vision area of the portfolio forward than Martin and the ALV. Fortunately, DARPA was light on its feet in making that change in strategy happen.